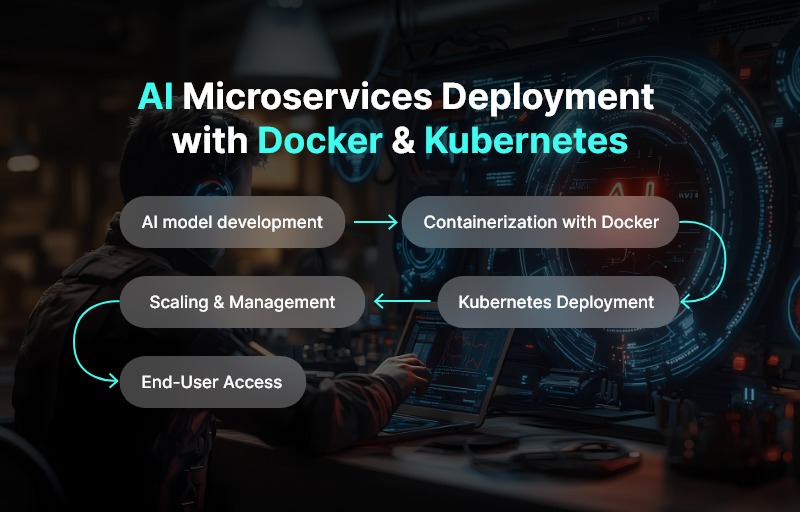

Artificial Intelligence (AI) applications are not just about training models anymore. Real-world AI systems involve multiple models, APIs, data pipelines, and monitoring tools that must work together smoothly. If you package everything into one giant monolithic app, it quickly becomes unmanageable.

That’s where Docker and Kubernetes shine.

- Docker helps you containerize your AI services.

- Kubernetes (K8s) orchestrates those containers at scale.

In this guide, we’ll break down how to deploy AI microservices with Docker and Kubernetes — step by step.

Why AI Needs Microservices

AI workloads are resource-heavy and dynamic. Microservices architecture helps because:

- Separation of concerns – Model training, preprocessing, inference, and monitoring can run as separate services.

- Scalability – Scale the inference service independently of data preprocessing.

- Flexibility – Swap out models without touching the whole system.

- Resilience – If one microservice fails, the rest keep running.

- Continuous delivery – Update a model or API without downtime.

Example: An AI-powered recommendation system might run:

- A user behavior tracking microservice

- A recommendation model inference service

- A logging/analytics microservice

- An API gateway to tie it all together

Introduction to Docker and Kubernetes

Docker: Your AI Packaging Tool

Docker creates containers, which bundle your application code, dependencies, and configurations into a portable unit. For AI:

- Package models with frameworks like TensorFlow or PyTorch.

- Run the same image on local, staging, or production environments.

- Share images easily via Docker Hub or private registries.

Kubernetes: Your AI Orchestrator

Kubernetes manages clusters of containers. It helps you with:

- Scaling – add/remove replicas based on traffic.

- Self-healing – restarts failed containers automatically.

- Load balancing – distributes requests across services.

- Rolling updates – update services without downtime.

Microservices Architecture for AI

In AI-driven systems, a microservices design might look like this:

- Data Preprocessing Service: Cleans, validates, and formats data.

- Model Training Service: Trains and updates ML models.

- Model Inference Service: Handles real-time predictions.

- API Gateway: Single entry point for external clients.

- Monitoring Service: Logs metrics, detects drift, and monitors resource usage.

Each service runs in its own Docker container and is managed by Kubernetes deployments.

Kubernetes Objects for Microservices

When deploying microservices, you’ll work with these Kubernetes objects:

- Pod: Smallest deployable unit; usually runs one container.

- Deployment: Manages pods, ensures the desired state.

- ReplicaSet: Ensures the correct number of pod replicas.

- Service: Exposes pods internally or externally.

- ConfigMap: Stores configuration as key-value pairs.

- Secret: Stores sensitive data (API keys, credentials).

- Ingress: Routes external HTTP/S traffic to your service.

- PersistentVolume (PV) / PersistentVolumeClaim (PVC): Stores datasets and models.
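On the application side, values from a ConfigMap or Secret usually arrive as environment variables (or as mounted files). The sketch below shows one way the inference service might read them at startup. The variable names `MODEL_PATH`, `BATCH_SIZE`, and `API_KEY`, and their defaults, are hypothetical and not part of the setup described in this guide.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    model_path: str   # non-sensitive, would come from a ConfigMap
    batch_size: int   # non-sensitive, would come from a ConfigMap
    api_key: str      # sensitive, would come from a Secret


def load_settings(env=None):
    """Read settings from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    api_key = env.get("API_KEY")
    if not api_key:
        # Fail fast at startup instead of on the first request.
        raise RuntimeError("API_KEY is not set; check the Secret wired into the pod")
    return Settings(
        model_path=env.get("MODEL_PATH", "/app/model.pt"),
        batch_size=int(env.get("BATCH_SIZE", "8")),
        api_key=api_key,
    )


if __name__ == "__main__":
    # Simulate the variables Kubernetes would inject into the container.
    demo_env = {"MODEL_PATH": "/models/recsys.pt", "BATCH_SIZE": "16", "API_KEY": "dummy"}
    print(load_settings(demo_env))
```

Keeping configuration outside the image this way means the same container can run unchanged in local, staging, and production clusters.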

Deploying a Simple Application

Let’s deploy an AI inference microservice (Flask + PyTorch model).

Step 1: Containerize with Docker

Create a Dockerfile:

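    # Base image (an assumption: the FROM line is missing from the original; pick the Python version your model needs)
    FROM python:3.11-slim

    WORKDIR /app

    # Install dependencies
    COPY requirements.txt .
    RUN pip install -r requirements.txt

    # Copy model and app
    COPY . .

    EXPOSE 5000

    CMD ["python", "app.py"]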

Build and push the image:

    docker build -t username/ai-service .
    docker push username/ai-service

Step 2: Create a Kubernetes Deployment

deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ai-service
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: ai-service
      template:
        metadata:
          labels:
            app: ai-service
        spec:
          containers:
            - name: ai-service
              image: username/ai-service:latest
              ports:
                - containerPort: 5000
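A Deployment's `spec.selector.matchLabels` has to match the labels in `spec.template.metadata.labels`. If they differ (for example `ai-services` versus `ai-service`), the API server rejects the manifest. A small pre-flight check can catch this before `kubectl apply`. The version below is a minimal sketch in Python, with the manifest written as a plain dict (an assumption made to avoid a YAML parsing dependency); `selector_mismatches` is a hypothetical helper name.

```python
def selector_mismatches(deployment):
    """Return the selector labels that the pod template does not carry."""
    spec = deployment["spec"]
    wanted = spec["selector"]["matchLabels"]
    actual = spec["template"]["metadata"].get("labels", {})
    return {k: v for k, v in wanted.items() if actual.get(k) != v}


# The same Deployment as a Python dict (mirrors deployment.yaml).
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "ai-service"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "ai-service"}},
        "template": {
            "metadata": {"labels": {"app": "ai-service"}},
            "spec": {
                "containers": [{
                    "name": "ai-service",
                    "image": "username/ai-service:latest",
                    "ports": [{"containerPort": 5000}],
                }]
            },
        },
    },
}

if __name__ == "__main__":
    problems = selector_mismatches(deployment)
    print("selector OK" if not problems else f"selector mismatch: {problems}")
```

Running `kubectl apply --dry-run=server -f deployment.yaml` asks the cluster to perform the same validation without creating anything.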

Apply it:

    kubectl apply -f deployment.yaml

Step 3: Expose the Service

service.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: ai-service
    spec:
      selector:
        app: ai-service
      ports:
        - protocol: TCP
          port: 80
          targetPort: 5000
      type: LoadBalancer

Apply it:

    kubectl apply -f service.yaml

Verifying the Deployment

Check if pods are running:

    kubectl get pods

Check service details:

    kubectl get service ai-service

Hit the external IP (or NodePort for local clusters):

    curl http://<EXTERNAL-IP>/predict
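For scripted checks, a request to the same endpoint can be sent from Python. This is a sketch only: the JSON payload shape (`{"features": [...]}`) and the use of POST are assumptions, since the request format of `app.py` is not shown in this guide, and `host` stands for the external IP (or `IP:NodePort`) reported by the service.

```python
import json
import urllib.request


def build_predict_request(host, features):
    """Build (but do not send) a POST request for the /predict endpoint."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(host, features, timeout=5.0):
    """Send the request and decode the JSON response."""
    req = build_predict_request(host, features)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `predict(host, [0.1, 0.2, 0.3])` with the service's address returns whatever JSON the inference service replies with. Building the request separately from sending it keeps the payload logic easy to test without a running cluster.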

Accessing the Application

Minikube:

    minikube service ai-service

Cloud (EKS, GKE, AKS): Use the EXTERNAL-IP of the LoadBalancer.

Ingress: Configure for domain-based routing with TLS.

Scaling the Application

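Scale manually (the replica count here is only an example):

    kubectl scale deployment ai-service --replicas=5

Enable autoscaling (the thresholds here are example values):

    kubectl autoscale deployment ai-service --cpu-percent=70 --min=2 --max=10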
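However autoscaling is enabled, the Horizontal Pod Autoscaler picks a replica count from the ratio of the observed metric to its target. The sketch below reproduces that documented rule in Python while ignoring refinements such as stabilization windows and pods that are not yet ready. The function name and the default bounds are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA rule: ceil(currentReplicas * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # The result is always clamped to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, two replicas averaging 90% CPU against a 50% target give `ceil(2 * 90 / 50)`, so the deployment would grow to four replicas. CPU-based autoscaling also needs a metrics source in the cluster and CPU requests set on the container.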

Updating the Application

For a new model or API change, update the image:

    docker build -t username/ai-service:v2 .
    docker push username/ai-service:v2

Update the deployment:

    kubectl set image deployment/ai-service ai-service=username/ai-service:v2

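Kubernetes then performs a rolling update: it starts pods with the new image and retires the old ones gradually, so the service stays available throughout.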
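How many pods are replaced at once is governed by the Deployment's `maxSurge` and `maxUnavailable` settings, both 25% by default. A quick calculation, sketched here in Python with a hypothetical helper name, shows what that means for the two-replica deployment used in this guide.

```python
import math


def rollout_bounds(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Return (max_total_pods, min_available_pods) during a rolling update.

    Percentages follow the Deployment defaults; Kubernetes rounds
    maxSurge up and maxUnavailable down.
    """
    surge = math.ceil(replicas * max_surge_pct / 100)
    unavailable = math.floor(replicas * max_unavailable_pct / 100)
    return replicas + surge, replicas - unavailable
```

With `replicas=2` the function returns `(3, 2)`: one extra pod is started, and both old pods keep serving until it is ready. For this to translate into zero downtime in practice, the new pods should also define a readiness probe, so traffic reaches them only once the model has loaded.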

Conclusion

Deploying AI microservices with Docker and Kubernetes isn’t as complicated as it looks. By breaking your AI workloads into modular microservices, containerizing them with Docker, and orchestrating with Kubernetes, you gain:

- Flexibility

- Scalability

- High availability

- Easier updates

This approach is the backbone of modern AI infrastructure—whether you’re deploying chatbots, recommendation engines, fraud detection systems, or computer vision apps.

FAQs

1. Why not just deploy AI as a monolithic app?

Because AI systems need flexibility. Microservices allow independent scaling, easier debugging, and faster updates.

2. Can I run AI microservices on local Kubernetes?

Yes. Use Minikube, Kind, or Docker Desktop for local clusters. For production, use managed services like GKE, EKS, or AKS.

3. Do AI workloads require GPUs in Kubernetes?

Not always. Inference can run on CPUs. But for heavy training or high-throughput inference, you can attach GPU nodes with Kubernetes device plugins.

4. How do I handle large datasets for AI microservices?

Use Kubernetes PersistentVolumes (PV), claimed through PersistentVolumeClaims (PVC) and mounted into pods, or read directly from cloud object storage (e.g., S3, GCS).

5. What’s the best way to update AI models in production?

- Package the new model into a container

- Push the image to a registry

- Update deployment in Kubernetes (rolling updates ensure zero downtime)

6. How do I monitor AI microservices?

Use Prometheus + Grafana for metrics, the EFK stack (Elasticsearch, Fluentd, Kibana) for logging, and model monitoring tools for drift detection.
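To make "drift detection" concrete, here is a minimal sketch of one widely used statistic, the Population Stability Index (PSI), which compares the distribution of a feature at training time with what the service sees in production. PSI is chosen here purely as an illustration; it is not named in this guide, and the bin count and the `eps` floor are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range fall into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A value near zero means the two samples look alike, and larger values indicate a bigger shift. A monitoring service could compute this on a schedule and export it as a metric for Prometheus to scrape.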

7. Is Kubernetes overkill for small AI projects?

If you’re running a single model on one server, yes. But if you need scalability, resilience, and automation, Kubernetes is worth it.